Copilot improves productivity by surfacing and synthesizing organizational content. But for security/risk teams, the natural question is:

"Can I stop Copilot from reading confidential documents?"

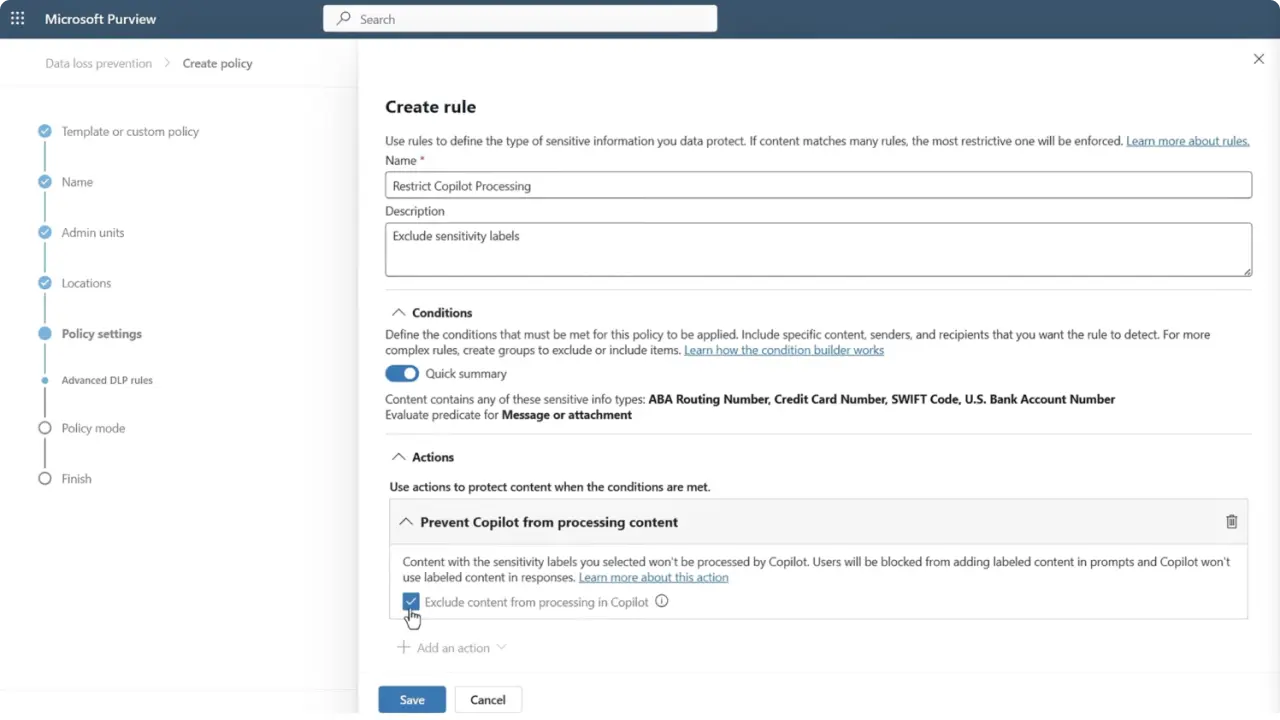

The Copilot policy location in Microsoft Purview Data Loss Prevention (DLP) answers that: it lets admins selectively prevent Copilot from processing content that matches DLP rules (most commonly via sensitivity labels). This capability is essential for organizations that must protect Intellectual Property (IP), regulated data, or other high-risk content from being ingested into AI workflows.

Think of the flow as three clear steps:

Key takeaway: The model is label-first: accurate labeling and broad label coverage are what make this protection effective.

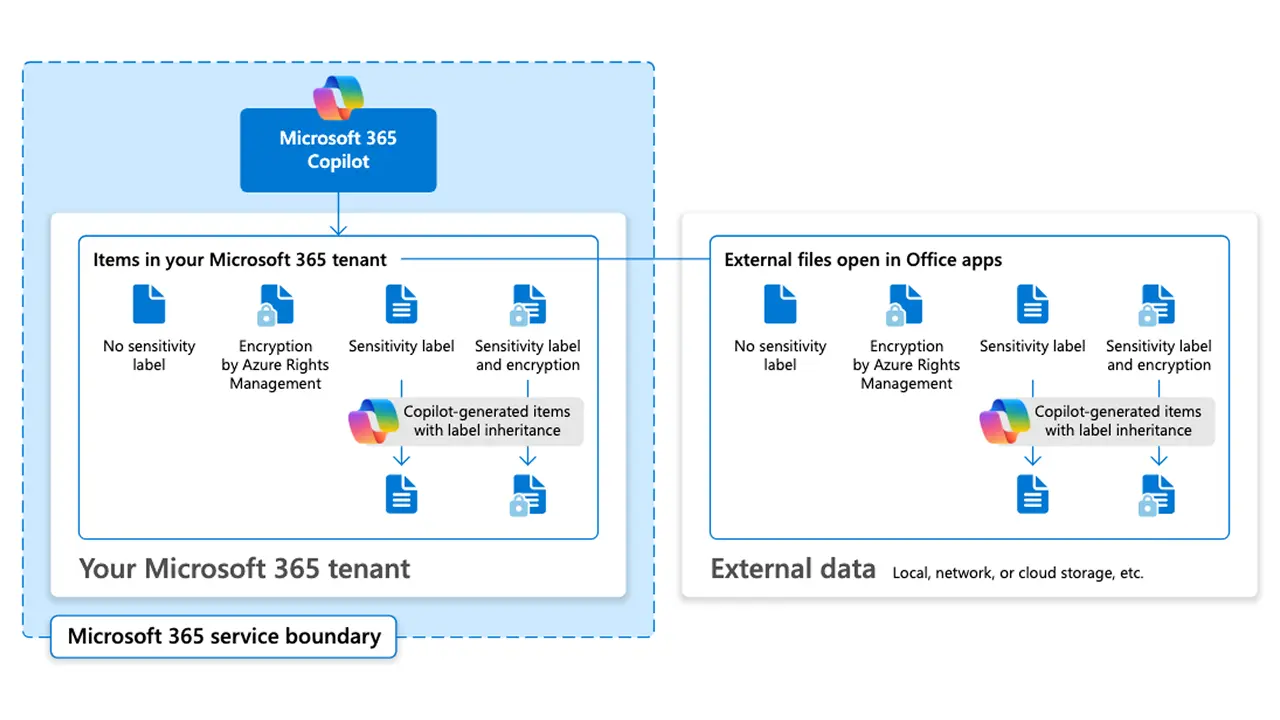

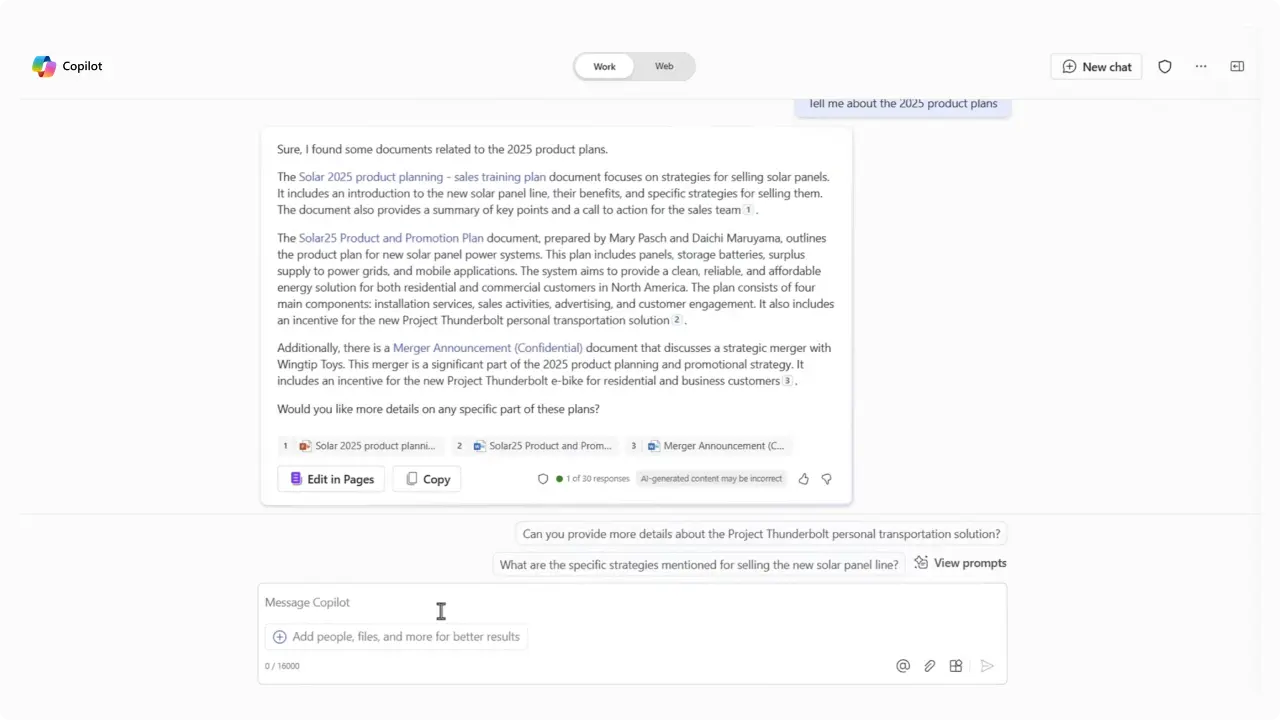

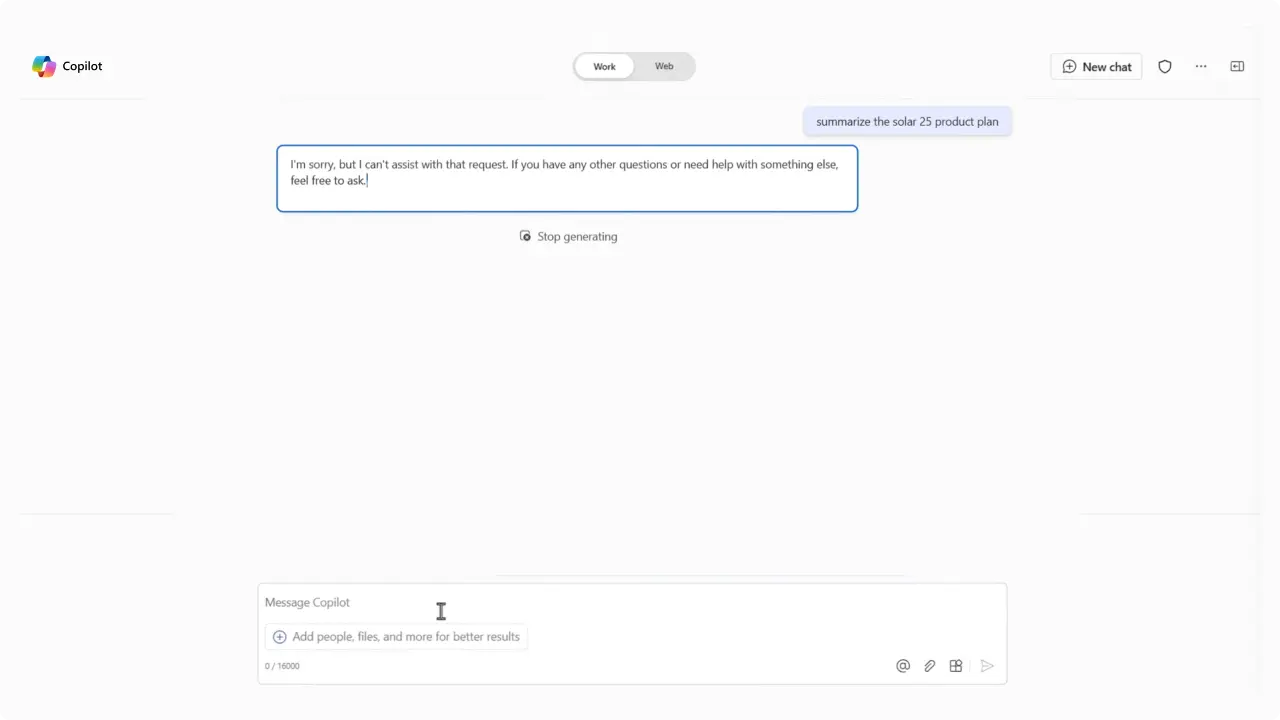

By default, Copilot can process any content you already have permission to access. In practice, that means its responses may draw on or cite sensitive files, even those with sensitivity labels, until a Copilot-specific restriction is in place.

Below is a condensed walkthrough of setting up a Copilot DLP policy.

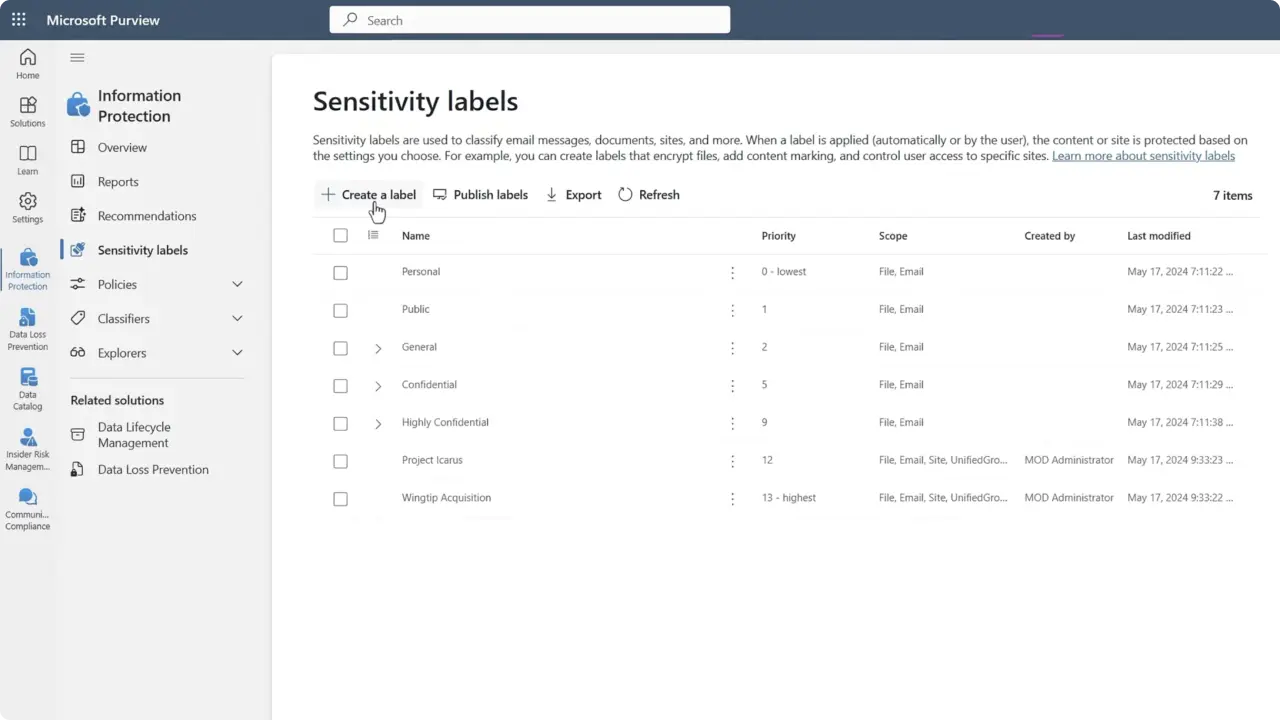

1. Audit & plan: Inventory sensitive information and map it to sensitivity labels (e.g., Confidential, Highly Confidential). Record the percentage of high-risk documents that are already labeled.

2. Create or refine sensitivity labels: Ensure labels are published and, where possible, configure auto-labeling rules to increase coverage. Note: auto-labeling currently applies only to Office files and PDFs, so other content types remain a coverage gap.

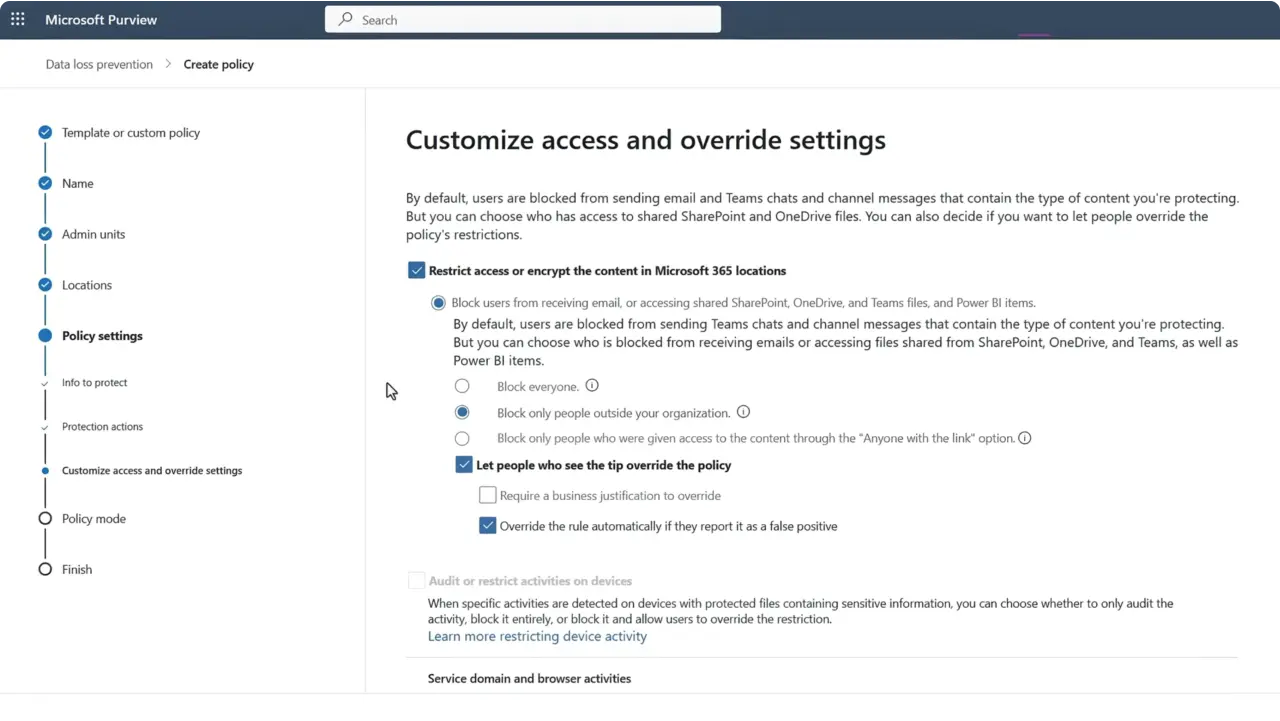

3. Open Microsoft Purview → Data loss prevention → Create custom policy: Select the Microsoft 365 Copilot (preview) policy location (note: it’s available from the Custom template). When you select the Copilot location, other locations for that policy are disabled, so be mindful of scope.

4. Define rules: Create one or more rules that match specific sensitivity labels and set the action to Prevent Copilot from processing content. Using a separate rule per label can make auditing and testing easier.

5. Deploy to pilot scope: Target a controlled group (pilot users or a small site collection) before broad rollout. Policy changes can take time to apply; Microsoft notes that updates may take up to a few hours in some scenarios.
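The coverage figure from step 1 can be sketched in a few lines of Python. This is a minimal illustration that assumes you have exported a document inventory from whatever discovery tooling you use; the record fields (`path`, `label`, `high_risk`) and the sample data are hypothetical and do not correspond to any Purview export format.

```python
# Hypothetical inventory export: one record per document.
# The field names ("path", "label", "high_risk") are assumptions for illustration only.
inventory = [
    {"path": "finance/q3-forecast.xlsx", "label": "Highly Confidential", "high_risk": True},
    {"path": "legal/contract-draft.docx", "label": None, "high_risk": True},
    {"path": "hr/handbook.pdf", "label": "General", "high_risk": False},
    {"path": "rnd/design-spec.docx", "label": "Confidential", "high_risk": True},
]


def label_coverage(records):
    """Return the percentage of high-risk documents that carry any sensitivity label."""
    high_risk = [r for r in records if r["high_risk"]]
    if not high_risk:
        return 0.0
    labeled = sum(1 for r in high_risk if r["label"])
    return 100.0 * labeled / len(high_risk)


def unlabeled_high_risk(records):
    """List the high-risk documents that a label-based rule could not match."""
    return [r["path"] for r in records if r["high_risk"] and not r["label"]]


print(f"High-risk label coverage: {label_coverage(inventory):.1f}%")
print("Unlabeled high-risk documents:", unlabeled_high_risk(inventory))
```

The list of unlabeled high-risk documents is the more actionable output: those are the items a label-based Copilot rule would not catch until they are labeled.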

Any item that matches your DLP rule (for example, content labeled Confidential or Highly Confidential) is excluded from Copilot’s grounding. Those files won’t be used in answers or appear in citations, so your results reflect only content that’s permitted by the policy.
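As a mental model only, the exclusion behavior described above can be sketched as a label filter applied before grounding. The `Item` and `Rule` types, the rule names, and the sample files are hypothetical; this is not how Purview or Copilot is implemented, and it calls no Microsoft API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Item:
    name: str
    label: Optional[str]  # sensitivity label, or None if unlabeled


@dataclass(frozen=True)
class Rule:
    name: str
    blocked_label: str  # one rule per label, as suggested in step 4


# Hypothetical rules mirroring the labels used as examples in the text.
RULES = [
    Rule("Block Confidential", "Confidential"),
    Rule("Block Highly Confidential", "Highly Confidential"),
]


def matching_rule(item, rules):
    """Return the first rule whose label matches the item, or None."""
    for rule in rules:
        if item.label == rule.blocked_label:
            return rule
    return None


def groundable(items, rules):
    """Keep only items that no rule matches; everything else is excluded."""
    return [item for item in items if matching_rule(item, rules) is None]


items = [
    Item("roadmap.docx", "Confidential"),
    Item("lunch-menu.docx", "General"),
    Item("merger-notes.docx", None),  # sensitive but unlabeled
]

for item in items:
    rule = matching_rule(item, RULES)
    status = f"excluded by '{rule.name}'" if rule else "available for grounding"
    print(f"{item.name}: {status}")
```

Note that the unlabeled `merger-notes.docx` passes through the filter in this sketch, which is the label-first caveat in practice: a rule that matches on labels cannot exclude content that was never labeled.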

Use this as a short operational checklist when preparing a Copilot DLP rollout:

Blocking Copilot from processing sensitive content is possible and practical today, but it is only as strong as your labeling and governance program. Treat the Microsoft 365 Copilot policy location as one control in a layered data protection strategy: label accurately, pilot broadly, and combine DLP with Data Security Posture Management (DSPM) and monitoring to measure effectiveness.
