Generative AI and agents are reshaping work, but they also amplify governance gaps. Microsoft’s recent guidance makes one point very clear: Copilot and agents can only work with the data they can access, so every over-permissioned site, broken permission inheritance, or unlabeled sensitive file becomes a magnified risk. In short, uncontrolled sharing is the single biggest operational exposure when you introduce AI that can summarize, synthesize, and surface content across your tenant.

Most internal oversharing is not malicious; it is a byproduct of convenience and historical configuration decisions. When sites use overly broad privacy settings, default sharing is left open, or groups keep inherited permissions that no longer make sense, Copilot and agent features can inadvertently surface information to people who technically have access but should not see it. Because Copilot can synthesize data from across sites, one misconfigured location now has a larger blast radius: a single overshared repository can ripple through summaries, answers, and agent results. That means governing Microsoft 365 Copilot isn’t just about protecting the AI; it starts with tight access governance across SharePoint, Teams, OneDrive, and related stores.
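
The amplification effect is easiest to see in a toy model. The Python sketch below is a hypothetical illustration, not a Microsoft API: `Document`, `User`, `retrievable()`, and the `ORG_WIDE` group name are invented for this example. It assumes only what the paragraph above states, namely that an assistant retrieves whatever the requesting user is already permitted to open, so a single broken inheritance chain is enough to expose a sensitive file to every employee.

```python
from dataclasses import dataclass, field

# Hypothetical stand-in for an org-wide grant such as "Everyone except external users".
ORG_WIDE = "everyone-internal"


@dataclass(frozen=True)
class Document:
    title: str
    site: str
    allowed_groups: frozenset[str]  # groups granted at least read access


@dataclass
class User:
    name: str
    groups: set[str] = field(default_factory=set)

    def effective_groups(self) -> set[str]:
        # Every internal user is implicitly covered by the org-wide grant.
        return self.groups | {ORG_WIDE}


def retrievable(user: User, corpus: list[Document]) -> list[str]:
    """Permission-trimmed retrieval: return only titles the user could already open."""
    groups = user.effective_groups()
    return [d.title for d in corpus if d.allowed_groups & groups]


corpus = [
    Document("Q3 board deck", "Finance", frozenset({"finance"})),
    # Broken inheritance: a sensitive HR file picked up the org-wide grant.
    Document("Salary bands", "HR", frozenset({"hr", ORG_WIDE})),
    Document("Cafeteria menu", "Intranet", frozenset({ORG_WIDE})),
]

intern = User("intern")
analyst = User("analyst", {"finance"})

print(retrievable(intern, corpus))   # ['Salary bands', 'Cafeteria menu']
print(retrievable(analyst, corpus))  # ['Q3 board deck', 'Salary bands', 'Cafeteria menu']
```

Nothing in the retrieval step is "wrong": the intern sees the salary file because the permission grant is wrong, which is why the fix belongs in access governance rather than in the AI layer.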

Microsoft recommends treating Copilot adoption as a staged program: begin with a pilot, scale through a controlled deployment, and then move to steady operation. A pilot exposes Copilot to a small set of users and a limited number of low-risk sites so you can surface permission issues and tune policies before broad rollout. The deploy phase is where remediation happens at scale (tightening site privacy, applying sensitivity labels, and removing excessive access), and the operate phase focuses on continuous monitoring, automated policies, and incremental improvement. Following this blueprint helps teams balance innovation with risk control as AI takes on more workplace responsibilities.

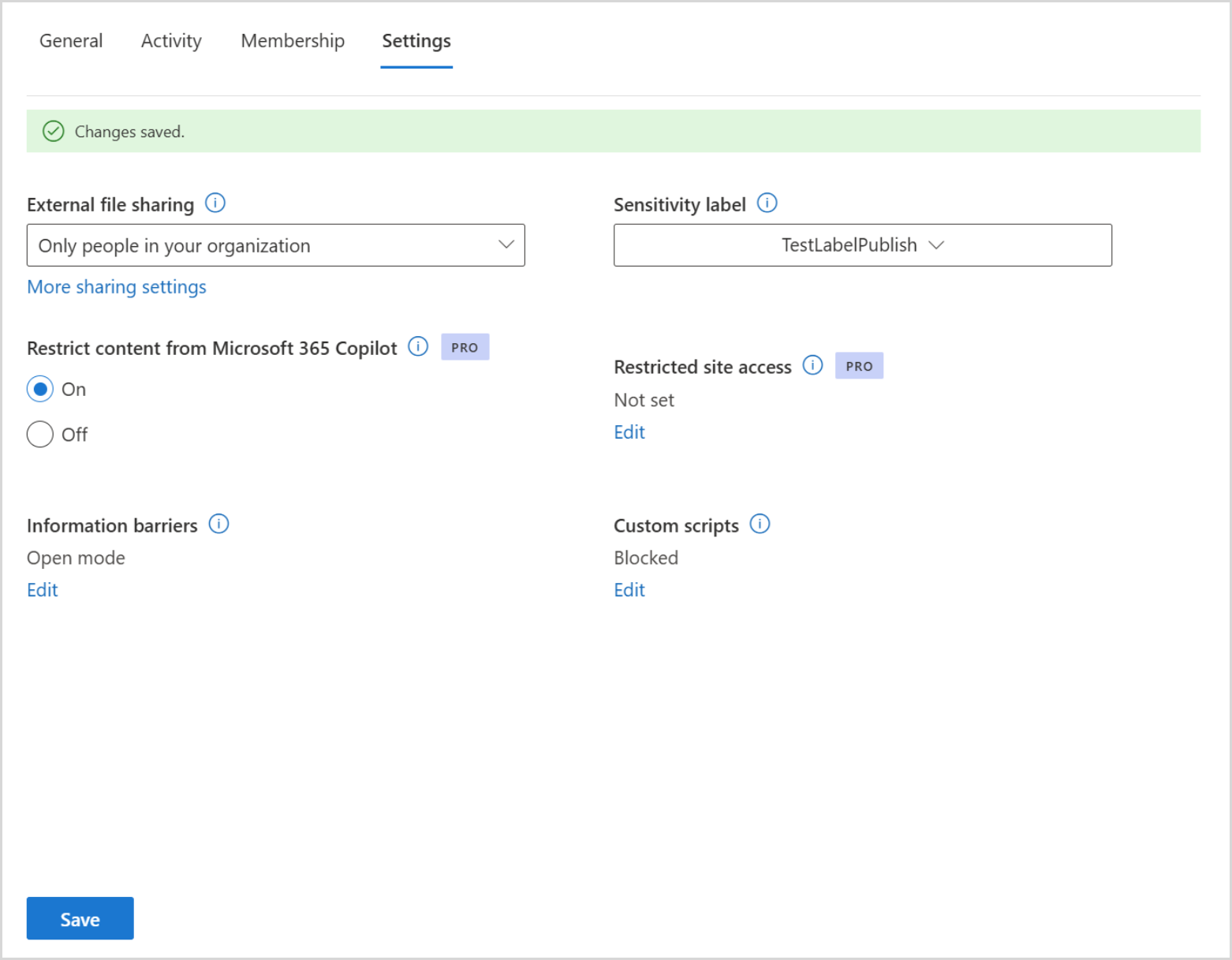

SharePoint is often the heart of file collaboration in an organization, so it directly shapes what Copilot and agents can surface. SharePoint Advanced Management offers a practical way to assess and clean up a sprawling site estate before you widen Copilot access. It surfaces misconfigurations, inactive or ownerless sites, and permission anomalies in one place so teams can prioritize remediation. It also supports site lifecycle actions, such as archiving stale sites or making them read-only, which shrinks the attack surface before AI can touch that data. When admins need immediate containment, Restricted Content Discovery keeps a site’s content out of Copilot and organization-wide search, and Restricted Access Control limits a site to members of specified groups; both are blunt but effective stopgaps while remediation proceeds. These controls let security and IT teams act fast without breaking business continuity.
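
A minimal sketch of the triage step, assuming you have exported site findings (owner count, last activity, org-wide access, presence of sensitive content) into a simple record. `SiteRecord`, `triage()`, the 180-day threshold, and the site paths are all hypothetical; the real SharePoint Advanced Management reports and policies have their own fields and settings. The code only shows how findings map to the remediation actions described above.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical inactivity threshold; align it with your own lifecycle policy.
INACTIVE_AFTER_DAYS = 180


@dataclass(frozen=True)
class SiteRecord:
    url: str
    owner_count: int
    last_activity: date
    org_wide_access: bool        # e.g. shared with everyone in the organization
    has_sensitive_content: bool


def triage(site: SiteRecord, today: date) -> list[str]:
    """Map report findings for one site to suggested remediation actions."""
    actions = []
    if site.owner_count == 0:
        actions.append("assign an owner")
    if (today - site.last_activity).days > INACTIVE_AFTER_DAYS:
        actions.append("set read-only or archive")
    if site.org_wide_access and site.has_sensitive_content:
        # Containment first: keep the site out of AI discovery while permissions are fixed.
        actions.append("restrict discovery until permissions are fixed")
    return actions


today = date(2024, 6, 30)
inventory = [
    SiteRecord("/sites/legal", 2, date(2024, 6, 1), False, True),
    SiteRecord("/sites/old-project", 0, date(2023, 1, 15), True, False),
    SiteRecord("/sites/hr", 1, date(2024, 6, 20), True, True),
]

for site in inventory:
    print(site.url, triage(site, today) or ["no action"])
```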

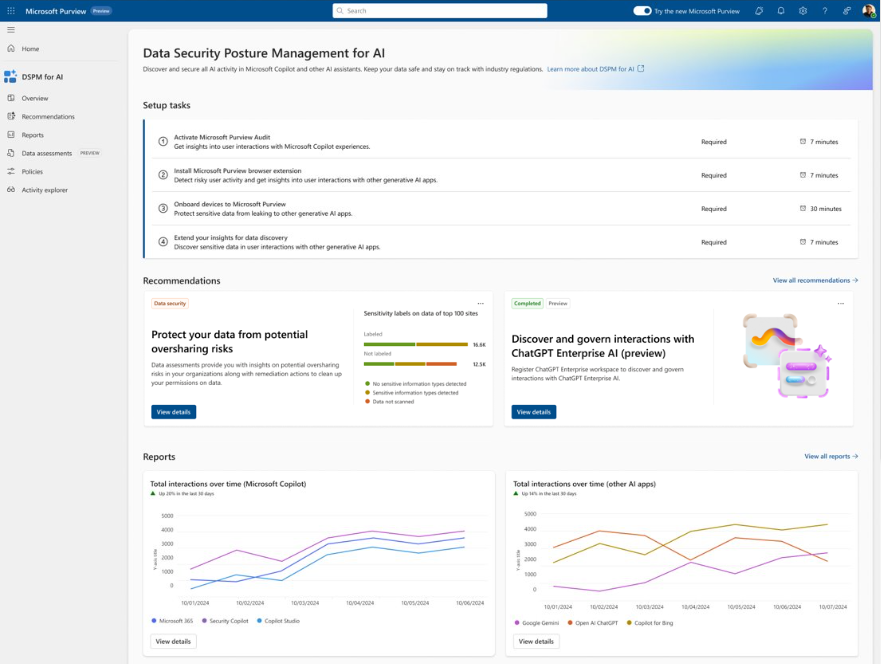

Cleaning up SharePoint is necessary, but it is only one part of a broader data posture. Microsoft Purview Data Security Posture Management (DSPM) for AI helps teams scale protections across the entire Microsoft 365 estate. DSPM provides visibility into how Copilot and agents are used, surfaces data risk assessments, and offers ready-to-use policies to prevent data loss. Its built-in data risk assessment runs by default against the most active sites and flags issues such as unlabeled sensitive files, overexposed sharing links, and unusual access patterns. These assessments give a prioritized view of where oversharing is most likely to affect AI outputs, so remediation is targeted and efficient rather than scattershot.
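
To illustrate what a "prioritized view" means, here is a hypothetical scoring sketch. The `SiteSignals` fields, the weights, and `prioritize()` are assumptions made for this example; they are not how DSPM for AI actually computes its assessments. Like the default assessment, it narrows to the most active sites first and then ranks them by risk.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SiteSignals:
    site: str
    monthly_active_users: int
    unlabeled_sensitive_files: int
    overexposed_links: int       # e.g. "anyone" or org-wide sharing links
    unusual_access_events: int


# Hypothetical weights chosen for illustration only.
WEIGHTS = {"unlabeled": 3, "links": 2, "unusual": 1}


def risk_score(s: SiteSignals) -> int:
    return (WEIGHTS["unlabeled"] * s.unlabeled_sensitive_files
            + WEIGHTS["links"] * s.overexposed_links
            + WEIGHTS["unusual"] * s.unusual_access_events)


def prioritize(sites: list[SiteSignals], top_active: int) -> list[tuple[str, int]]:
    """Keep the most active sites, rank them by risk, and drop sites with no findings."""
    active = sorted(sites, key=lambda s: s.monthly_active_users, reverse=True)[:top_active]
    ranked = sorted(active, key=risk_score, reverse=True)
    return [(s.site, risk_score(s)) for s in ranked if risk_score(s) > 0]


sites = [
    SiteSignals("Sales", 900, 10, 4, 1),
    SiteSignals("Engineering", 1200, 0, 0, 0),
    SiteSignals("HR", 300, 5, 10, 2),
    SiteSignals("Archive", 5, 50, 50, 0),
]

print(prioritize(sites, top_active=3))  # [('Sales', 39), ('HR', 37)]
```

Note that `Archive` has the highest raw score but falls outside the top three sites by activity, so it never appears. A usage-scoped assessment is a sensible starting point, but low-traffic sites still need coverage through broader reviews.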

Identifying oversharing is only half the job; the other half is remediation that balances security with business needs. Purview lets organizations create DLP policies that block Copilot and agents from processing files or emails with specific sensitivity labels, and auto-labeling reduces the manual burden by applying labels to sensitive content that is still unlabeled. When a high-risk site needs an immediate stopgap, Restricted Content Discovery can keep Copilot from surfacing that site’s content even though users retain access to it. Lifecycle policies can automatically remove stale files so that outdated or irrelevant content does not pollute AI responses. Together, targeted blocking, auto-labeling, and lifecycle cleanup maintain productivity while keeping the data that feeds AI compliant and safe.
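
The following sketch models how these three remediation layers combine for a single item. Everything here is hypothetical: the `Item` type, the `Highly Confidential` label name, the SSN-style regex standing in for a sensitive information type, and the three-year staleness cutoff are illustrative assumptions, not Purview configuration. In practice Purview and SharePoint enforce these controls, and they apply on top of the user’s own permissions.

```python
import re
from dataclasses import dataclass, replace
from datetime import date
from typing import Optional

BLOCKED_LABELS = {"Highly Confidential"}              # hypothetical DLP rule scope
AUTO_LABEL = "Highly Confidential"                    # hypothetical auto-applied label
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")    # stand-in for a sensitive info type
STALE_AFTER_DAYS = 3 * 365                            # hypothetical lifecycle cutoff


@dataclass(frozen=True)
class Item:
    name: str
    content: str
    label: Optional[str]
    site_rcd_enabled: bool      # site has Restricted Content Discovery turned on
    last_modified: date


def auto_label(item: Item) -> Item:
    """Label unlabeled items whose content matches the sensitive pattern."""
    if item.label is None and SSN_PATTERN.search(item.content):
        return replace(item, label=AUTO_LABEL)
    return item


def copilot_can_use(item: Item, today: date) -> tuple[bool, str]:
    """Apply auto-labeling, then label-based blocking, RCD, and lifecycle cleanup in turn."""
    item = auto_label(item)
    if item.label in BLOCKED_LABELS:
        return False, "blocked by label-based DLP rule"
    if item.site_rcd_enabled:
        return False, "site excluded by Restricted Content Discovery"
    if (today - item.last_modified).days > STALE_AFTER_DAYS:
        return False, "stale; removed by lifecycle cleanup"
    return True, "allowed"


today = date(2024, 6, 30)
items = [
    Item("payroll.xlsx", "Employee 123-45-6789", None, False, date(2024, 5, 1)),
    Item("merger-notes.docx", "draft terms", None, True, date(2024, 6, 1)),
    Item("2019-plan.pptx", "old roadmap", None, False, date(2019, 3, 1)),
    Item("handbook.pdf", "vacation policy", "General", False, date(2024, 1, 10)),
]

for it in items:
    print(it.name, copilot_can_use(it, today))
```

The payroll file shows why auto-labeling matters: it arrives unlabeled, so a label-based DLP rule alone would miss it until the label is applied.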

Long-term governance depends on sensible delegation. Centralized control can stall business teams, so Microsoft’s guidance emphasizes roles and delegation models that let site owners and business unit leaders own remediation without overloading IT. Admins can trigger site access reviews that prompt owners to confirm who should retain access, and operational dashboards show where corrective actions are still outstanding. The goal is not to lock everything down permanently but to create a repeatable governance rhythm that prevents regression as people create new sites and share new files. This distributed model keeps the environment manageable at scale while holding teams accountable.
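
A delegated review cycle can be sketched as routing flagged sites to their owners and tracking what is still open. `Site`, `assign_reviews()`, `outstanding()`, and the `it-governance` fallback reviewer are hypothetical names for illustration; SharePoint Advanced Management’s site access reviews have their own workflow. The sketch highlights one design choice worth keeping: ownerless sites fall back to a central team instead of silently dropping out of the review.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    url: str
    owners: tuple[str, ...]
    flagged: bool  # e.g. surfaced by an oversharing report


def assign_reviews(sites: list[Site], fallback: str = "it-governance") -> dict[str, list[str]]:
    """Route each flagged site to its owners; ownerless sites go to a central team."""
    queue: dict[str, list[str]] = defaultdict(list)
    for site in sites:
        if not site.flagged:
            continue
        for reviewer in site.owners or (fallback,):
            queue[reviewer].append(site.url)
    return dict(queue)


def outstanding(queue: dict[str, list[str]], completed: set[tuple[str, str]]) -> dict[str, int]:
    """Count open reviews per reviewer, given (reviewer, url) pairs already completed."""
    return {r: sum((r, url) not in completed for url in urls) for r, urls in queue.items()}


sites = [
    Site("/sites/hr", ("alice",), True),
    Site("/sites/sales", ("bob", "carol"), True),
    Site("/sites/legacy", (), True),
    Site("/sites/wiki", ("alice",), False),
]

queue = assign_reviews(sites)
print(queue)
print(outstanding(queue, {("bob", "/sites/sales")}))
```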

A successful program reduces the number of overshared sites, lowers the volume of unlabeled sensitive files, and narrows the set of items that Copilot and agents can reference without explicit protection. Success shows up as fewer surprises in Copilot outputs, more precise and safer AI summaries, and a clear audit trail for any high-risk access or activity. Importantly, success also means minimal business disruption: users retain the collaboration patterns they need while sensitive content is correctly protected. That balance is what turns data from a liability into a governed asset for trustworthy AI.

Start by running an assessment of your SharePoint estate and the sites that Copilot would surface in pilot scenarios. Use DSPM to get a prioritized list of risks, and apply auto-labeling and DLP where possible to close the most urgent gaps quickly. If you find high-risk or high-value sites, use Restricted Content Discovery to keep Copilot from surfacing them while you remediate. Finally, establish a cadence of access reviews and lifecycle cleanup so the environment does not regress as adoption grows. Taken together, these steps give you a practical, repeatable path to govern Microsoft 365 Copilot without stifling innovation.
