Generative AI and agentic workflows are moving fast from experiments to production pilots.

Organizations are already embedding LLM-powered assistants into business apps, but the hard part is safely connecting those assistants to real systems (APIs, databases, business logic) without rebuilding everything.

The Model Context Protocol (MCP) and Azure AI Foundry together offer a practical, minimally invasive path. You host a small MCP server (for example, a FastAPI app on Azure App Service) that exposes a curated set of tools and prompts, then register that server with an Azure AI Foundry agent so the agent can discover and call your app’s capabilities.

This pattern lets teams add conversational automation to legacy apps quickly while keeping control over security, auditing, and governance.

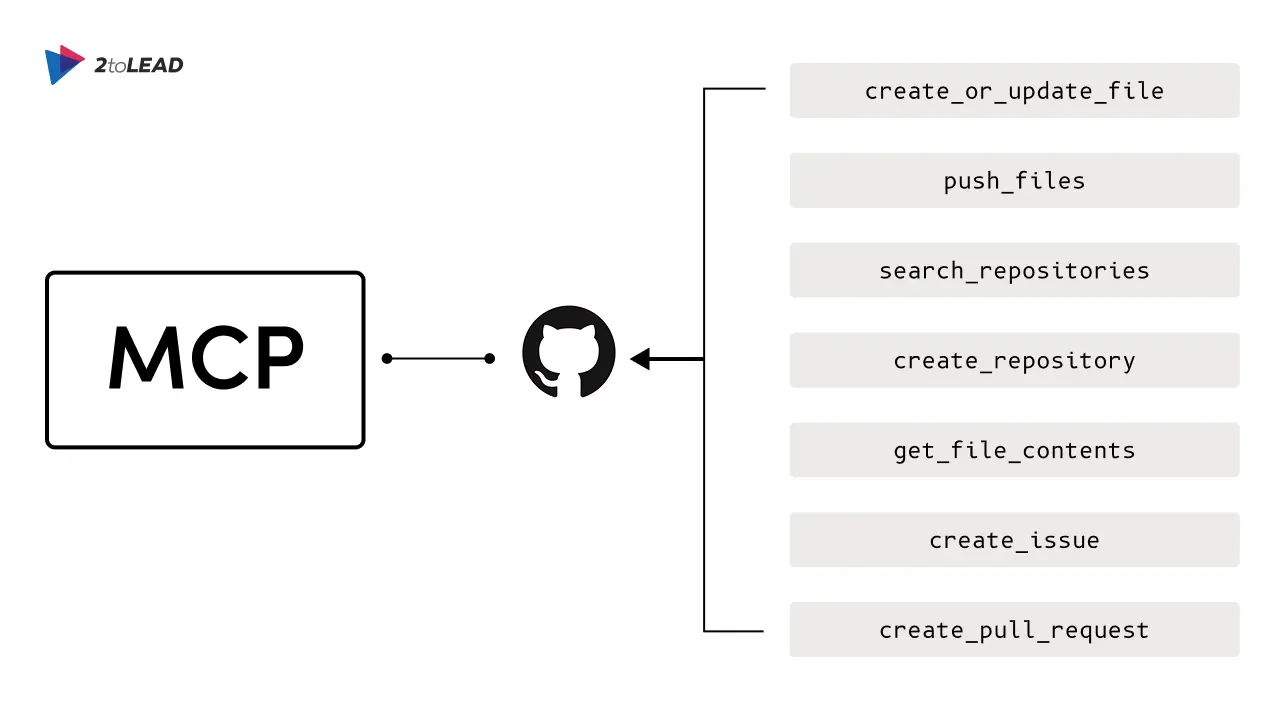

MCP is an open protocol that standardizes how applications expose context, prompts, resources, and executable tools to LLM-based clients (agents).

Instead of each agent implementation inventing its own adapter to talk to services, MCP defines a predictable JSON-RPC 2.0 surface so agents can discover tools and their prompting metadata, then call those tools in a consistent way.
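To make the discovery and invocation surface concrete, here is a minimal sketch of the two messages an agent typically sends. MCP messages are JSON-RPC 2.0 envelopes, and `tools/list` and `tools/call` are the protocol's method names; the `create_todo` tool and its `title` argument are hypothetical and only illustrate the shape.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # simple incrementing request ids


def rpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Discovery: ask the server which tools it offers.
list_tools = rpc_request("tools/list")

# Invocation: call one tool by name with structured arguments.
# "create_todo" and its arguments are hypothetical, not from a real server.
call_tool = rpc_request(
    "tools/call",
    {"name": "create_todo", "arguments": {"title": "Renew TLS certificate"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

Because every server answers the same two methods, an agent platform needs no per-application adapter; only the tool names and argument schemas differ.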

That makes it far easier to plug agent platforms (like Azure AI Foundry) into real apps and services.

For developer teams, MCP reduces custom glue code, enforces clear contracts for tool invocation, and enables reuse across different agents and LLM providers.

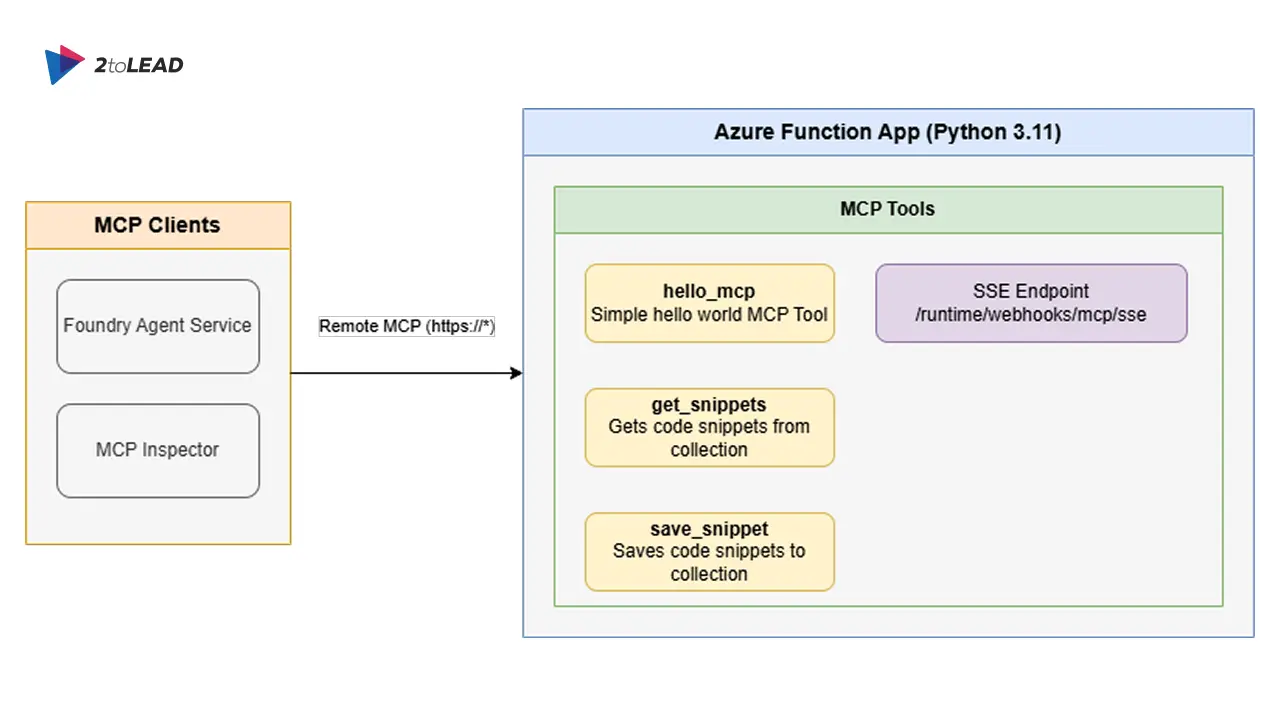

Azure AI Foundry supports registering remote MCP server endpoints as tools for agents.

In practice that means an operator or developer can point a Foundry agent at an MCP server URL and server label; the agent will then discover the available tools and be able to invoke them as part of its reasoning and action flow.
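As a rough illustration of what that registration carries, the sketch below builds a tool definition from a server label and URL. The `server_label` and `server_url` fields come from the text; the `"type": "mcp"` and `allowed_tools` keys, the helper name, and the example URL are assumptions for illustration, so check the exact payload shape against Microsoft's documentation before relying on it.

```python
from typing import Iterable, Optional
from urllib.parse import urlparse


def mcp_tool_definition(
    server_label: str,
    server_url: str,
    allowed_tools: Optional[Iterable[str]] = None,
) -> dict:
    """Build a hypothetical MCP tool definition for an agent.

    The key names other than server_label and server_url are assumptions.
    """
    if not server_label:
        raise ValueError("server_label must not be empty")
    parsed = urlparse(server_url)
    # Only accept absolute HTTPS URLs so agent traffic is always encrypted.
    if parsed.scheme != "https" or not parsed.netloc:
        raise ValueError("server_url must be an absolute https URL")

    definition = {
        "type": "mcp",  # assumed discriminator for a remote MCP tool
        "server_label": server_label,
        "server_url": server_url,
    }
    if allowed_tools is not None:
        # Optionally narrow the agent to a subset of the server's tools.
        definition["allowed_tools"] = list(allowed_tools)
    return definition


# Hypothetical label and URL for an App Service-hosted MCP server.
todo_tool = mcp_tool_definition(
    "todo_mcp",
    "https://example-mcp.azurewebsites.net/mcp",
    allowed_tools=["list_todos", "create_todo"],
)
print(todo_tool)
```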

Microsoft documents this integration (how-to and configuration patterns) and ships sample code to help teams get started.

This capability gives platform teams an on-ramp for adding agent-driven automation without embedding LLMs directly inside every app.

Here’s a concise, practical architecture and implementation pattern you can use to turn a legacy App Service app into an agent-enabled system.

Agent (Azure AI Foundry) ⇄ MCP Server (FastAPI) on App Service ⇄ Legacy app / API / DB

FastAPI is lightweight, async-friendly, and easy to deploy; App Service supports Python web apps and provides managed TLS, autoscaling, and CI/CD integration.

This combination is well suited to a small MCP server that surfaces controlled capabilities to agents. Microsoft’s sample repo demonstrates this exact blueprint and shows both local testing and azd-based deployment steps.
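The core of such a server is a small dispatcher that answers `tools/list` and `tools/call`. The sketch below uses only the standard library and is not the code from Microsoft's sample: the to-do tools, the in-memory `TODOS` store (a stand-in for the legacy app), and the `handle_rpc` name are all hypothetical. It also skips the MCP initialization handshake and transport details, which an MCP SDK would normally handle.

```python
import json
from typing import Any, Dict, List

TODOS: List[Dict[str, Any]] = []  # hypothetical stand-in for the legacy app's data


def list_todos() -> List[Dict[str, Any]]:
    return list(TODOS)


def create_todo(title: str) -> Dict[str, Any]:
    item = {"id": len(TODOS) + 1, "title": title, "done": False}
    TODOS.append(item)
    return item


# Curated tool registry: only what is listed here is reachable by an agent.
TOOLS: Dict[str, Dict[str, Any]] = {
    "list_todos": {
        "fn": list_todos,
        "description": "List all to-do items.",
        "inputSchema": {"type": "object", "properties": {}},
    },
    "create_todo": {
        "fn": create_todo,
        "description": "Create a to-do item.",
        "inputSchema": {
            "type": "object",
            "properties": {"title": {"type": "string"}},
            "required": ["title"],
        },
    },
}


def _error(req_id: Any, code: int, message: str) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def handle_rpc(message: Dict[str, Any]) -> Dict[str, Any]:
    """Dispatch one JSON-RPC 2.0 request to the tool registry."""
    req_id = message.get("id")
    method = message.get("method")

    if method == "tools/list":
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}

    if method == "tools/call":
        params = message.get("params") or {}
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(req_id, -32602, "Unknown tool")
        try:
            result = tool["fn"](**(params.get("arguments") or {}))
        except TypeError:
            # Missing or unexpected arguments for the tool function.
            return _error(req_id, -32602, "Invalid arguments")
        content = [{"type": "text", "text": json.dumps(result)}]
        return {"jsonrpc": "2.0", "id": req_id, "result": {"content": content, "isError": False}}

    return _error(req_id, -32601, "Method not found")
```

In a FastAPI app, a single POST route could parse the request body as JSON and return `handle_rpc(body)`; keeping the dispatcher free of framework code also makes it easy to unit test.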

Use azd or your CI/CD pipeline to deploy the FastAPI app to App Service. App Service offers managed TLS and networking options (VNet integration, private endpoints) that you can use to lock down traffic.

Register the server with a Foundry agent by supplying its server_label and server_url. Foundry will then list that server’s tools in the agent UI and use them during conversations.

When you expose runtime capabilities to an autonomous agent, you must assume the agent may propose many different tool calls. Follow defensive patterns: expose narrow tools, enforce strict auth, and keep every call observable and auditable.
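One way to apply a defensive stance to incoming tool calls is a guard that runs before any tool executes. The sketch below combines an allowlist, argument checks, and an audit log entry. The tool names, the `MAX_ARG_LENGTH` limit, and the `guard_tool_call` helper are hypothetical, and the caller identity is assumed to have been authenticated upstream (for example by App Service authentication).

```python
import logging
from typing import Any, Dict, Set

audit_log = logging.getLogger("mcp.audit")

# Allowlist: tool name -> argument names the tool may receive (hypothetical tools).
ALLOWED_TOOLS: Dict[str, Set[str]] = {
    "list_todos": set(),
    "create_todo": {"title"},
}
MAX_ARG_LENGTH = 200  # assumed limit; tune per tool


class ToolCallRejected(Exception):
    """Raised when a proposed tool call fails a defensive check."""


def guard_tool_call(caller: str, name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Validate a proposed tool call and record the decision for auditing."""
    if name not in ALLOWED_TOOLS:
        audit_log.warning("rejected caller=%s tool=%s reason=not_allowed", caller, name)
        raise ToolCallRejected(f"tool not allowed: {name}")

    unexpected = set(arguments) - ALLOWED_TOOLS[name]
    if unexpected:
        audit_log.warning("rejected caller=%s tool=%s reason=unexpected_args", caller, name)
        raise ToolCallRejected(f"unexpected arguments: {sorted(unexpected)}")

    for key, value in arguments.items():
        # This sketch only permits short strings; real tools need per-field schemas.
        if not isinstance(value, str) or len(value) > MAX_ARG_LENGTH:
            audit_log.warning("rejected caller=%s tool=%s reason=bad_value arg=%s", caller, name, key)
            raise ToolCallRejected(f"invalid value for argument: {key}")

    # Log argument names only, so sensitive values stay out of the audit trail.
    audit_log.info("allowed caller=%s tool=%s args=%s", caller, name, sorted(arguments))
    return arguments
```

Rejecting by default and logging every decision means an unexpected tool proposal shows up as an auditable event rather than as a side effect in the legacy system.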

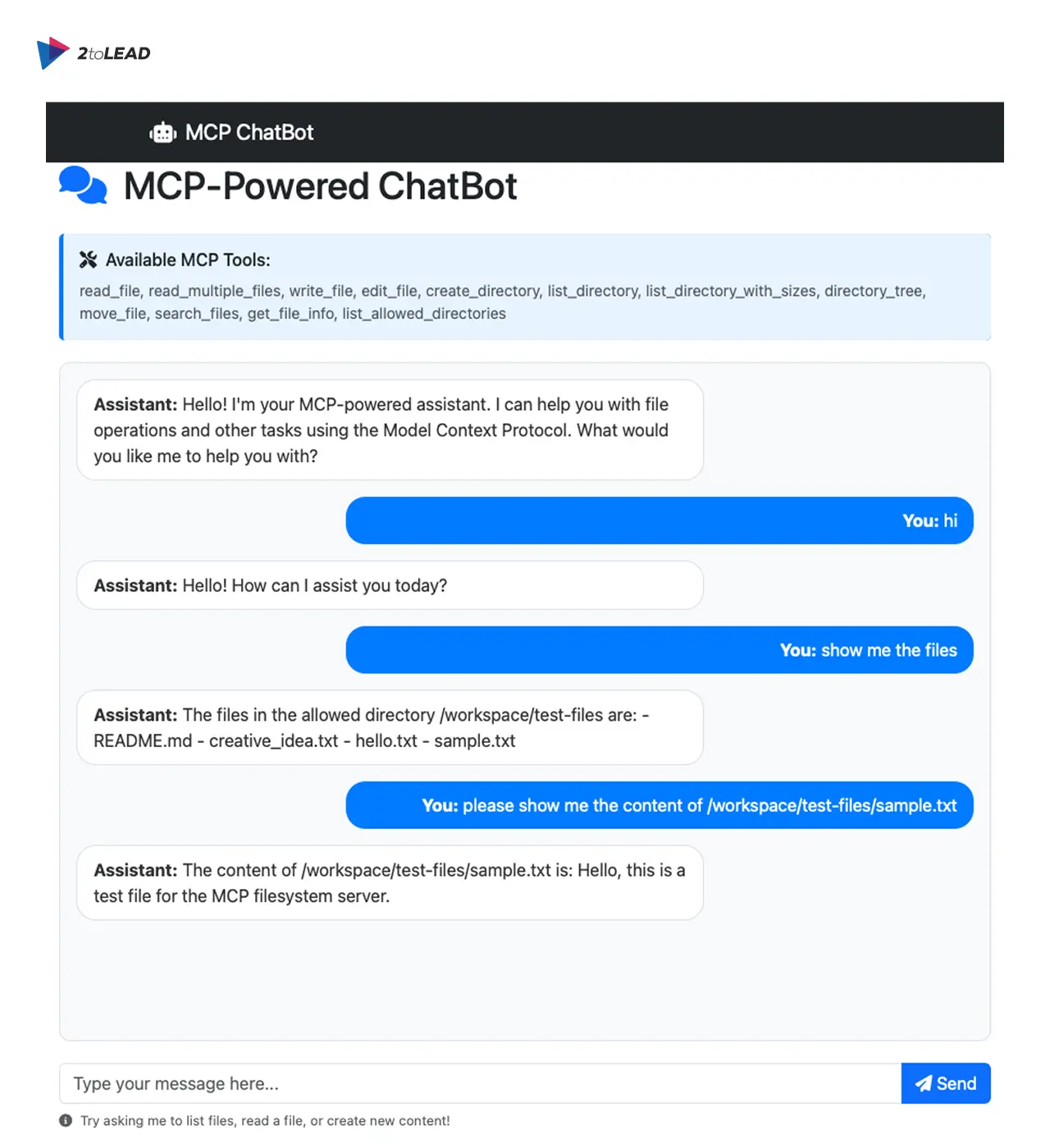

Microsoft published a hands-on demo that walks the workflow end-to-end: a FastAPI-based MCP server deployed to App Service, wired to Azure AI Foundry agents, and shown in a chat UI where the agent calls MCP tools to operate on sample to-do items.

That example is an excellent starting point for teams wanting to prototype quickly and iterate on security and UX.

Use it as a sandbox: clone the repo, experiment locally, and then try azd up to deploy into a test subscription.

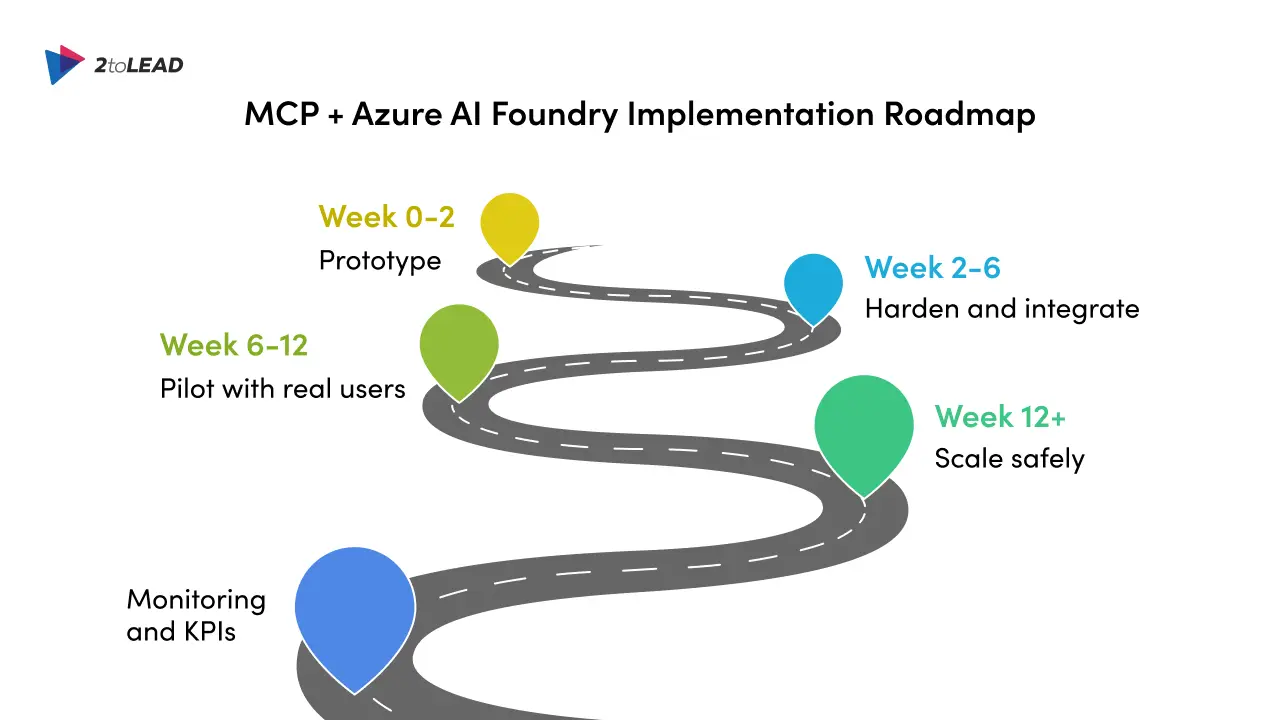

From a business perspective, MCP + Foundry can materially shorten time-to-pilot for agentic features.

However, remember the “gen-AI paradox”: many companies adopt gen AI quickly, but achieving measurable bottom-line impact requires careful change management, instrumentation, and governance.

Use pilots to define clear KPIs (task completion rate, time saved, error reduction) and instrument them from day one.
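As a small illustration of instrumenting those KPIs, the sketch below aggregates per-task records into a completion rate, an error reduction against a baseline, and average time saved. The `TaskRecord` fields, the `pilot_kpis` helper, and the sample numbers are hypothetical; a real pilot would pull these values from telemetry.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TaskRecord:
    completed: bool          # did the agent finish the task?
    had_error: bool          # did the task produce an error needing rework?
    agent_seconds: float     # time taken with the agent
    baseline_seconds: float  # time the same task took before the pilot


def pilot_kpis(records: List[TaskRecord], baseline_error_rate: float) -> Dict[str, float]:
    """Aggregate pilot task records into the three KPIs named above."""
    if not records:
        raise ValueError("at least one task record is required")

    total = len(records)
    completed = [r for r in records if r.completed]
    error_rate = sum(r.had_error for r in records) / total

    # Time saved is only meaningful for tasks the agent actually completed.
    saved = [r.baseline_seconds - r.agent_seconds for r in completed]
    avg_saved = sum(saved) / len(saved) if saved else 0.0

    return {
        "task_completion_rate": len(completed) / total,
        "error_reduction": baseline_error_rate - error_rate,
        "avg_seconds_saved": avg_saved,
    }


# Hypothetical sample data for four pilot tasks.
sample = [
    TaskRecord(True, False, 30, 120),
    TaskRecord(True, False, 60, 120),
    TaskRecord(False, True, 90, 120),
    TaskRecord(True, False, 45, 120),
]
kpis = pilot_kpis(sample, baseline_error_rate=0.4)
print(kpis)
```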

Weeks 0–2: Prototype

Weeks 2–6: Harden & integrate

Weeks 6–12: Pilot with real users

Weeks 12+: Scale safely

Monitoring & KPIs

If you want to add conversational automation to your existing App Service apps without a full rewrite, the MCP + Azure AI Foundry pattern is a pragmatic, security-first approach.

Build a small MCP adapter (FastAPI), expose narrow tools, enforce strict auth and observability, and register the server with Foundry. Use Microsoft’s official samples and docs to accelerate a prototype, then harden and scale with governance and monitoring in place.

The result: agent-driven UX and automation with a centralized, auditable control surface.

Want expert guidance to make it happen? Reach out to our specialists at 2toLead. Our team can help you design, implement, and optimize your MCP + Azure AI Foundry integration so you can unlock the full potential of agentic automation safely and efficiently.
